C3D2 infrastructure based on NixOS

Setup

Enable nix flakes user wide

Add the setting to the user nix.conf. Only do this once!

echo 'experimental-features = nix-command flakes' >> ~/.config/nix/nix.conf
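Because `>>` appends on every run, a guarded variant makes this step safe to repeat. The following is a minimal sketch: the function name `enable_flakes_once` is hypothetical, and it only checks whether any line starting with `experimental-features` already exists, so review the file by hand if you have other experimental features configured.

```shell
# Hypothetical helper: append the flakes setting only if nix.conf does not
# already contain an experimental-features line.
enable_flakes_once() {
    conf="${1:-$HOME/.config/nix/nix.conf}"
    # Create the directory and file if they are missing.
    mkdir -p "$(dirname "$conf")"
    touch "$conf"
    if grep -q '^experimental-features' "$conf"; then
        echo "experimental-features already set in $conf, leaving it alone" >&2
    else
        echo 'experimental-features = nix-command flakes' >> "$conf"
    fi
}
```

Calling `enable_flakes_once` without arguments targets the user nix.conf used above; an explicit path can be passed for testing.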

Enable nix flakes system wide (preferred for NixOS)

Add this to your NixOS configuration:

nix.settings.experimental-features = [ "nix-command" "flakes" ];

The secrets repo

The secrets repo is deprecated. Everything should be done through sops. If you don't have secrets access, ask sandro or astro to get onboarded.

SSH access

If people should get root access to all machines, their keys should be added to ssh-public-keys.nix.

Deployment

Deploy to a remote NixOS system

For every host that has a nixosConfiguration in our Flake, there are two scripts that can be run for deployment via ssh.

- nix run .#HOSTNAME-nixos-rebuild switch

  Copies the current state to build on the target system. This may fail due to resource limits on e.g. Raspberry Pis.

- nix run .#HOSTNAME-nixos-rebuild-local switch

  Builds everything locally, then uses nix copy to transfer the new NixOS system to the target.

To use the cache from Hydra, set the following nix options, similar to enabling flakes:

trusted-public-keys = nix-cache.hq.c3d2.de:KZRGGnwOYzys6pxgM8jlur36RmkJQ/y8y62e52fj1ps=

trusted-substituters = https://nix-cache.hq.c3d2.de

This can also be set with the c3d2.addBinaryCache option from the c3d2-user-module.
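Since the remote build can fail on small targets, the two deployment scripts can be chained so that a local build is tried only when the remote one fails. This is a sketch, not part of the repo: `deploy_host` and the `NIX` override variable are hypothetical names, and any failure of the first command (not only resource limits) triggers the fallback.

```shell
# Hypothetical wrapper around the two deployment scripts described above.
# NIX can be overridden (e.g. for a dry run); it defaults to the real nix.
deploy_host() {
    host="$1"
    if [ -z "$host" ]; then
        echo "usage: deploy_host HOSTNAME" >&2
        return 2
    fi
    # First try building on the target itself.
    if "${NIX:-nix}" run ".#${host}-nixos-rebuild" switch; then
        return 0
    fi
    # Fall back to building locally and copying the result over.
    echo "remote build failed, building locally instead" >&2
    "${NIX:-nix}" run ".#${host}-nixos-rebuild-local" switch
}
```

Run it from the flake's checkout as `deploy_host HOSTNAME`.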

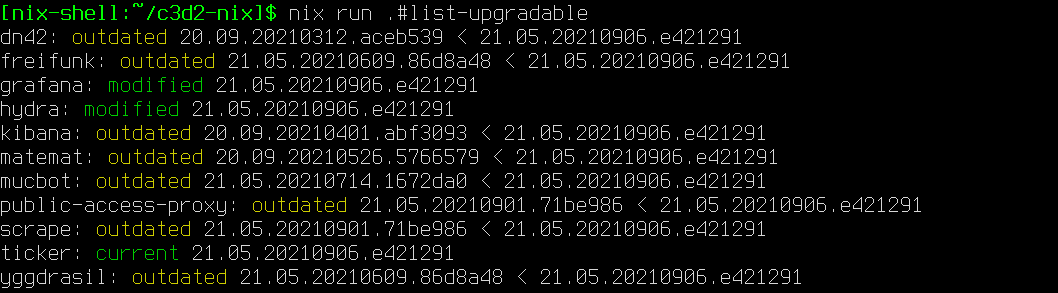

Checking for updates

nix run .#list-upgradable

Checks all hosts with a nixosConfiguration in flake.nix.

Update from Hydra build

The fastest way to update a system, a manual alternative to setting

c3d2.autoUpdate = true;

Just run:

update-from-hydra

Deploy a MicroVM

Build a microvm remotely and deploy

nix run .#microvm-update-HOSTNAME

Build microvm locally and deploy

nix run .#microvm-update-HOSTNAME-local

Update MicroVM from our Hydra

Our Hydra runs nix flake update daily in the updater.timer, pushing it to the flake-update branch so that it can build fresh systems. This branch is set up as the source flake in all the MicroVMs, so the following is all that is needed on a MicroVM-hosting server:

microvm -Ru $hostname

Cluster deployment with Skyflake

About

Skyflake provides Hyperconverged Infrastructure to run NixOS MicroVMs on a cluster. Our setup unifies networking with one bridge per VLAN. Persistent storage is replicated with Cephfs.

A nixosConfiguration is part of our Skyflake deployment if it includes the self.nixosModules.cluster-options module.

User interface

We use the less-privileged c3d2@ user for deployment. This flake's name on the cluster is config. Other flakes can coexist in the same user so that we can run separately developed projects like dump-dvb. leon and potentially other users can deploy Flakes and MicroVMs without name clashes.

Deploying

git push this repo to any machine in the cluster, preferably to Hydra, because building there won't disturb any services.

You don't deploy all MicroVMs at once. Instead, Skyflake allows you to select NixOS systems by the branches you push to. You must commit before you push!

Example: deploy nixosConfigurations mucbot and sdrweb (HEAD is your current commit)

git push c3d2@hydra.serv.zentralwerk.org:config HEAD:mucbot HEAD:sdrweb

This will:

- Build the configuration on Hydra, refusing the branch update on broken builds (through a git hook)

- Copy the MicroVM package and its dependencies to the binary cache that is accessible to all nodes with Cephfs

- Submit one job per MicroVM into the Nomad cluster

Deleting a nixosConfiguration's branch will stop the MicroVM in Nomad.
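The push in the example above can be generated from a list of nixosConfiguration names. The helper names below (`skyflake_refspecs`, `skyflake_deploy`) are hypothetical; the remote is the one from the example, and the clean-tree check is only a convenience for the "commit before you push" rule.

```shell
# Print one "HEAD:<name>" refspec per nixosConfiguration name.
skyflake_refspecs() {
    for name in "$@"; do
        printf 'HEAD:%s\n' "$name"
    done
}

# Hypothetical wrapper: refuse to push while there are uncommitted changes,
# then push HEAD to one branch per selected system.
skyflake_deploy() {
    remote="c3d2@hydra.serv.zentralwerk.org:config"
    if [ -n "$(git status --porcelain)" ]; then
        echo "uncommitted changes; commit before pushing" >&2
        return 1
    fi
    # Word splitting of the refspec list is intended here.
    git push "$remote" $(skyflake_refspecs "$@")
}
```

For example, `skyflake_deploy mucbot sdrweb` runs the same push as the example above. Assuming standard git semantics, a branch can be deleted again with `git push c3d2@hydra.serv.zentralwerk.org:config --delete mucbot`.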

Updating

TODO: how would you like it?

MicroVM status

ssh c3d2@hydra.serv.zentralwerk.org status

Debugging for cluster admins

Nomad

Check the cluster state

nomad server members

Nomad servers coordinate the cluster.

Nomad clients run the tasks.

Browse in the terminal

wander and damon are nice TUIs that are preinstalled on our cluster nodes.

Browse with a browser

First, tunnel TCP port :4646 from a cluster server:

ssh -L 4646:localhost:4646 root@server10.cluster.zentralwerk.org

Then, visit https://localhost:4646 for the full klickibunti (the graphical web UI).

Reset the Nomad state on a node

After upgrades, Nomad servers may fail to rejoin the cluster. Do this to make a Nomad server behave like a newborn:

systemctl stop nomad

rm -rf /var/lib/nomad/server/raft/

systemctl start nomad
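The three steps can be wrapped so that the Raft data is only deleted once Nomad has actually stopped. This is a sketch under assumptions: `reset_nomad_server_state` and the `SYSTEMCTL` override are hypothetical names that exist only to allow a dry run, and the default state directory is the one used in the commands above.

```shell
# Hypothetical wrapper for the three steps above. Run as root on the node.
# The defaults match the commands in this README.
reset_nomad_server_state() {
    state_dir="${1:-/var/lib/nomad}"
    systemctl_cmd="${SYSTEMCTL:-systemctl}"
    # Abort before deleting anything if Nomad could not be stopped.
    "$systemctl_cmd" stop nomad || return 1
    # Remove only the server's Raft state; client data stays untouched.
    rm -rf "${state_dir:?}/server/raft/"
    "$systemctl_cmd" start nomad
}
```

Calling `reset_nomad_server_state` without arguments performs the same reset as the manual commands.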

Secrets management

Secrets Management Using sops-nix

Adding a new host

Edit .sops.yaml:

- Add an AGE key for this host. Comments in this file tell you how to do it.

- Add a creation_rules section for host/$host/*.yaml files

Editing a host's secrets

Edit .sops.yaml to add files for a new host and its SSH pubkey.

# Get sops

nix develop

# Decrypt, start an EDITOR, encrypt

sops hosts/.../secrets.yaml

# Push

git commit -a -m "Adding new secrets"

git push origin

Secrets management with PGP

Add your gpg-id to the .gpg-id file in secrets and let somebody reencrypt it for you. Maybe this works for you, maybe not. I did it somehow:

tr '\n' ' ' < .gpg-id | PASSWORD_STORE_DIR="$(pwd)" xargs pass init

Your gpg key has to have the Authenticate flag set. If not, update it, push it to a keyserver, and wait. This is necessary so that you can log in to any machine with your gpg key.

Laptops / Desktops

In the past, this repo could be used as a module. While this is still technically possible, it is not recommended because the number of flake inputs has grown considerably and the modules are not designed with that in mind.

For end user modules take a look at the c3d2-user-module.

For the deployment options take a look at deployment.

ZFS setup

Set the disko options for the machine and run:

$(nix build --print-out-paths --no-link -L '.#nixosConfigurations.HOSTNAME.config.system.build.disko')